The 5 Minute Fact Check

Today we’re going to talk about how to fact check an article, different methods for doing so, and why 'fighting' disinformation isn't a strong framework.

Hello! Welcome to June’s Red Herring - Happy Pride Month! Today we’re going to talk about how to fact check an article and how to do it in the wild.

On the 27th, the US will have its first Presidential debate of the cycle, officially kicking off the fact-checking Olympics that is an ugly election season, which means that being able to fact check what you read is about to become even more important.

But fact checking doesn’t just show up in politics - any time you’ve read celebrity drama, heard about a new age spiritual practice, or read a statistic that doesn’t sound quite right, it’s important to have the skills to figure out if it’s true.

It’s also important because information sharing is a social practice - it’s how we connect and show that we care about each other. Fact checking your content, your tweets, or your press releases shows diligence and a desire to prevent harm.

Today we’ll focus on two ways of fact checking and how to apply them. Finding the process that fits best for you is key, and most people use a combination of tactics.

The SIFT Method.

One of the most common fact checking methods is the SIFT Method, developed by Mike Caulfield, which focuses on slowing down the process of consuming media and taking the time to consider what you’re reading. One of the things I like about this method is that it simplifies a lot of common advice and confronts the most common reason people miss misinformation - time!

Whether you’re scrolling on Instagram or reading a Reddit thread, taking a moment to pause is key. SIFT focuses on pausing and considering media upfront.

Let’s apply the SIFT Method to an incident from a couple of months ago. In December of 2023, the Economist and several other outlets ran with the following headline: One in Five Young Americans think the Holocaust is a Myth. The Economist cited their own polling data, suggesting that 20% of “young Americans” had taken to Holocaust denial.

So, let’s Stop. To start, 20% (1 in 5) is a really high number. Have you ever heard this sentiment outside of the internet? If so, what age was the person? Just at first glance, does this statistic match your understanding of the world?

If this seems fishy to you, then you’re right. Consistent data and polling on this topic has shown that Holocaust denial is around 3% across all age groups, a figure that has been replicated across studies. But it’s also a serious claim worth investigating, so let’s take it seriously!

Let’s Investigate the source. As stated in the headline, this is a poll from the Economist. The article includes a link to the data set. This article has since been put behind a paywall, but I took screenshots when it was first posted, and the link is still available in the paid article.

The Economist article itself states that the majority of respondents did not believe this claim, and that across all respondents, few (7%) actually agreed with the statement that the Holocaust is a myth. Even within the article itself, it starts to undercut its own claim - a gap between 20% within a single group and 7% in the total group is really suspicious.

Next, Find Better Coverage: This is the point at which you might discover the dreaded citation circle - or a type of internet click-through hole in which you always end up at the same article. In this situation, at the time the article was published almost everything led back to this single article. That’s not a good sign.

However, at the time of publication, Pew did have this article: What Americans Know About the Holocaust, and NBC had another article from 2020: Survey Finds Shocking Lack of Knowledge. Both of these do cite data - namely that 3% of people did not believe the Holocaust happened. While the article does frame the statistic as “Just 90% of respondents believed the Holocaust happened” - it’s worth noting that 90% is very high. Can you think of a similar topic that has that high a belief rate?

But most importantly, a jump from 3% to 20% amongst young people would be substantial. If this were true, everyone would know a young person who firmly believes the Holocaust did not happen and it would be a known, common belief. There’s little to suggest this specific claim is true, although there is evidence Americans are confused by the facts of the Holocaust in some areas.

Lastly, let’s find the original source:

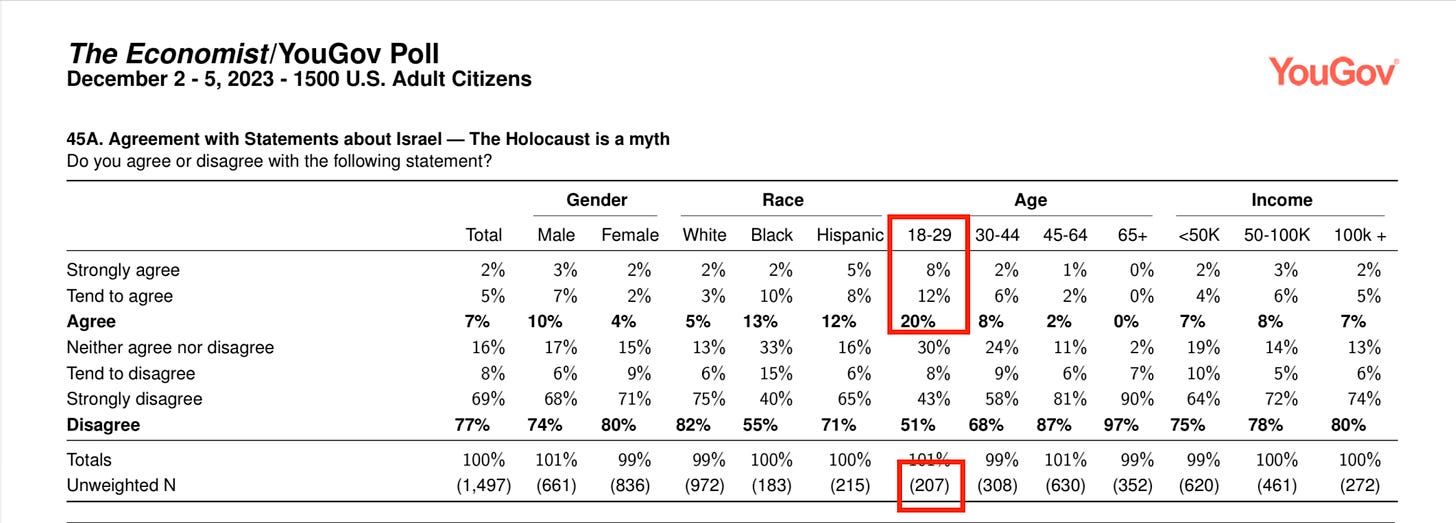

Here is the actual source of the data - a speed, opt-in poll of 1,500 US adults. There’s one immediate problem: the sample size for the demographic is way too small. Only 207 respondents were between the ages of 18-29, and you’ll notice that of all the age categories, it has the smallest sample. As an established norm, a poll should have a sample of at least 1,000 to be considered reliable - and even though the overall sample is 1,500, the sample for this age group is only 207.
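To get a feel for why 207 is a problem, here is a rough sketch of the textbook margin-of-error calculation. It assumes a simple random sample, a 95% confidence level, and the worst-case 50/50 split; the function name `margin_of_error` is my own, not something from the poll or from PEW. An opt-in poll doesn’t meet the random-sample assumption, so treat these numbers as a best case rather than the poll’s actual error.

```python
import math


def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Approximate margin of error for a simple random sample.

    Assumptions (not from the poll itself): random sampling,
    z=1.96 for a 95% confidence level, and proportion=0.5,
    which gives the widest (most cautious) margin.
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


# Compare the full poll with the 18-29 subgroup mentioned above.
for label, n in [("all respondents", 1500), ("ages 18-29", 207)]:
    moe = margin_of_error(n)
    print(f"{label}: n={n}, margin of error about +/-{moe * 100:.1f} points")

# all respondents: n=1500, margin of error about +/-2.5 points
# ages 18-29: n=207, margin of error about +/-6.8 points
```

Even under these generous assumptions, the uncertainty for the 18-29 group is almost three times as wide as for the full sample, which is one reason a surprising number from a small subgroup deserves a second look.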

I would highly recommend this article, Key things to know about polling, from Pew Research on public polling data. Pew’s article points out that news websites conduct polls in a lot of different ways that can cause bias - especially opt-in polls like this one. It’s important to cross-check polling data with other points of reference.

So, in the end, it’s very clear that this poll is incorrect. In fact, it was so incorrect that Pew Research made headlines by correcting it. But that didn’t stop a lot of people from tweeting about it at the time, giving the impression that young people are antisemitic.

Check out this video series by Mike Caulfield on Online verification skills and fact-checking:

Other Tips for Fact Checking:

John Spencer, a YouTube educator, lays out a framework for good media consumption called the 5 C’s of Critical Consuming: Context, Credibility, Construction, Corroboration, and Compare.

I tend to think about this method as a list of pro-tips to keep in mind online and a good addition to SIFT. While the points are pretty self-explanatory, construction (tone) and corroboration (can you find this information in other places?) stand out as noteworthy red flags that something is wrong. If a source has an overly positive or negative opinion of an event, group of people, or ideology, that can be a red flag. Check out this resource that explains this method in greater detail.

Another important red flag is the presence of extremely graphic, violent, or sexually explicit content. When fact checking a controversial topic, you may be met with disturbing images that are meant to make you feel a lot of emotions. Anti-choice groups are the most famous example of this strategy, and their materials often contain images that are medically inaccurate.

Whether you’re fact-checking a claim about war, transgender issues, or anything else controversial, it’s likely you will encounter graphic images as a way to put up your mental stop sign. Before fact-checking an issue that’s likely to bring up graphic imagery, take a moment to check in with yourself.

Okay, now what?

Check out this video on YouTube of a pseudoscience expert discussing common myths. You’ll notice a couple of common themes in the way that people often debunk false claims: Directness, Authority or Credibility, and Empathy.

These three points are my personal guide to fact-checking and debunking information. Directness means addressing the claim or concern head-on rather than talking around it, while authority or credibility means relying on established experts. Lastly, empathy means committing to genuine curiosity and a willingness to learn rather than immediately shutting down a claim. No one wants to feel stupid, and there’s a difference between being factually wrong and having a different opinion on a topic.

I would describe good fact-checking as a personal skill that’s central to engaging with people in the real world. It’s also a central part of being able to successfully debunk information. I suggest checking out the Debunking Handbook, which is the best current guide we have on how to debunk information. Fact checking and debunking are different, but the science of debunking can teach us about what actually works.

We know that making a genuine attempt at correcting the record requires us to provide information to others that is true and fact-driven, rather than bias-driven. Replacing one myth with another isn’t helpful to anyone involved. The trick is to reframe a narrative by centering neutral information and then giving context, rather than attempting to drag someone kicking and screaming into your own personal view.

This is why I strongly disagree with language around fact-checking and disinformation that focuses on ‘fighting’ or ‘winning a war’ against disinformation. Real, meaningful fact-checking and debunking isn’t about winning anything - it’s about genuine caring and consideration for others and yourself. Sharing accurate information with others so that they can make their own choices must be a central tenet of fact-checking in order for it to be effective.

Check out this article in Jacobin about the Environmental Impact of AI Technology and another in Bloomberg about how AI is Impacting Global Power Systems. This Vox article also covers the topic well - “AI already uses as much energy as a small country.”

Here’s a Recent Fact-Check from CNN: Yes, Washington Owned Slaves.

If you’ve never been to Snopes - one of the oldest fact checking websites around - I highly suggest it. There are also others, like PolitiFact and FactCheck.org, that are worth checking after the Presidential debate.

My favorite article of the month: Google Admits Its AI Overviews Search Feature Screwed Up (Wired).

Thank you for reading Red Herring! If you liked this article, you can share and subscribe to my email list: